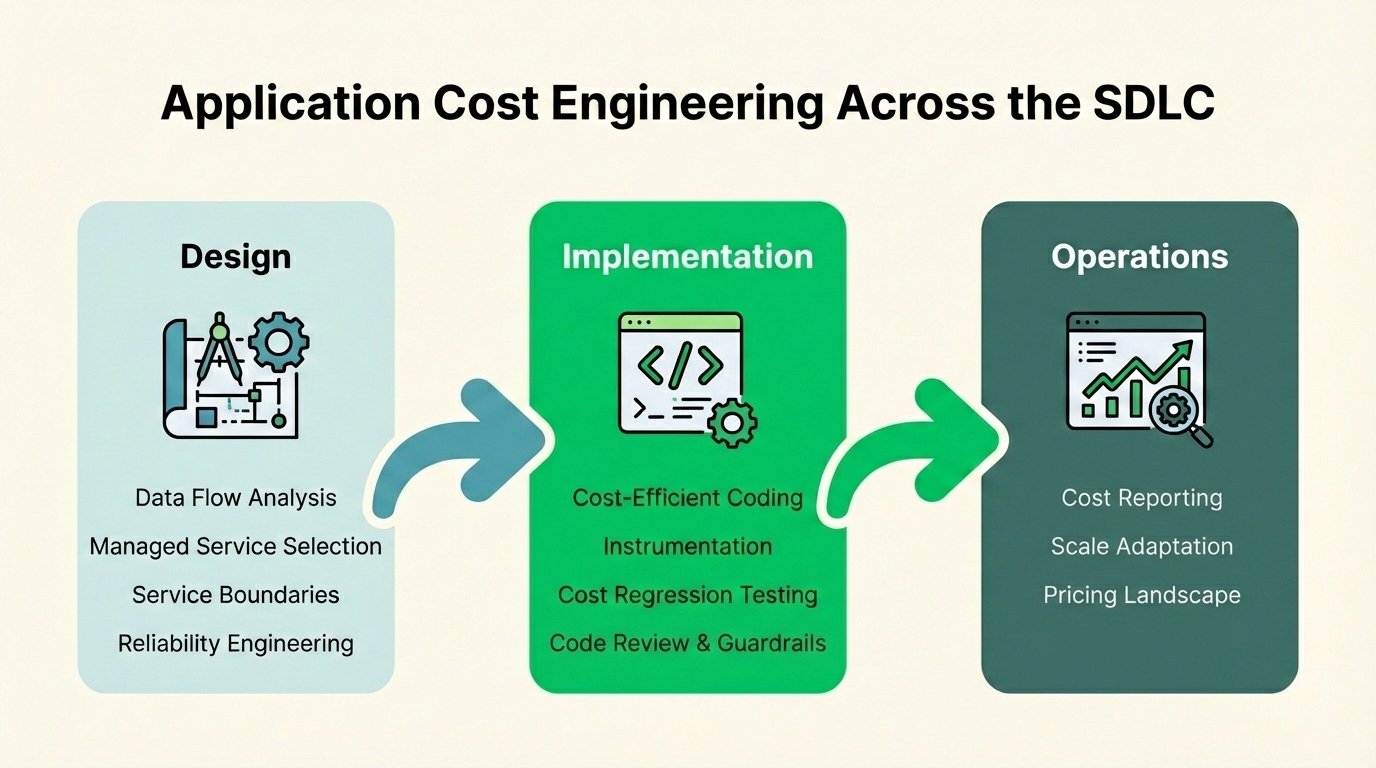

Application Cost Engineering is the practice of designing, implementing, and operating software systems with cost as an explicit, measurable dimension of quality. It treats cost the same way engineering teams already treat performance, reliability, and security: something to model early, validate during development, and monitor continuously in production.

Why It Matters

By the time code reaches production, the most impactful cost decisions have already been made. Architecture choices, service selections, and code patterns establish cost curves that persist for years. Just as we've learned for quality, security, and performance, building in cost efficiency beats firefighting to reengineer systems when issues surface late. Cloud spend is one of the fastest-growing cost categories for most organizations, and product teams increasingly need unit-level cost metrics, such as cost per customer and cost per transaction, to understand COGS, inform pricing, and forecast how costs will scale with usage. Even if engineering for cost is not a priority this quarter, it tends to become one, eventually.

Relationship to Traditional Cloud Cost Optimization

Application Cost Engineering is complementary to traditional cloud cost optimization. Traditional cloud cost optimization operates at the infrastructure layer and is focused on rate and workload optimization through things like savings plans, rightsizing, unused resource cleanup. Application Cost Engineering goes beyond to address the layer above: architecture and code paths where cost behavior is also determined, and where traditional optimization doesn't reach.

1. Design and Architecture

Before code exists, teams model the cost characteristics of proposed designs. The goal is identifying cost drivers and understanding growth patterns. Precise forecasting usually can't be achieved at this stage (there are too many unknowns), but the main costs in the system and their scaling factors can be determined and can influence the design.

Throughput and volume modeling. Before writing code, estimate how much data moves between components—and how those volumes change as the system scales. Identify fan-out points where one input triggers many downstream operations. Flag unbounded behaviors that grow without limits. These estimates inform foundational architectural decisions: event-driven vs polling, batch vs streaming ingestion, multi-tier storage vs single-tier retention, compression and deduplication strategies.

Managed service selection. For message streaming, do you choose AWS Kinesis, AWS MSK, AWS MSK Serverless, Confluent Cloud, or self-managed Kafka? Each has a different cost structure: shard-based pricing, broker instance hours, throughput-based tiers. The decision made at design time locks in component architectures, control flows, and implementations that are difficult to reverse. The same applies to databases, caching layers, ML inference endpoints, and logging backends. These aren't purely technical decisions—they're economic commitments. Before finalizing service choices, architecture reviews should include questions like "what does this cost at 10x scale?" and "what does this cost at 0.1x scale?" The answers often diverge more than expected.

Service boundaries. How you draw boundaries between services determines how granularly you can measure, attribute, and optimize costs. Monolithic architectures make it difficult to isolate which functionality drives which spend. Well-defined boundaries let you track costs per capability, identify which features justify their resource consumption, and optimize components independently. This doesn't require microservices—it requires intentional decomposition that aligns with how you want to measure and manage cost.

Reliability engineering. There's a close relationship between cost engineering and reliability engineering. Defining system behavior under heavy load (usage limits, rate limits, load shedding) has both cost and reliability implications. A system without these controls can fail under load or generate runaway costs. A well-designed cost plan addresses both concerns together.

These decisions (architecture, managed services, service boundaries, capacity safeguards) establish the economic foundation of the system.

2. Implementation and Validation

After the architectural choices have been made, the implementation of application code directly drives costs. Code interacting with an observability platform, an AI model, or a managed queue is generating spend with every call. Infrastructure choices matter too: instance sizing, auto-scaling policies, and network topology all affect the bill. If code and infrastructure aren't configured for cost efficiency (which is easy to get wrong), the system is wasting money.

Cost-efficient coding. Efficient algorithms reduce compute time, optimized database queries avoid expensive table scans, and care with how the disk is accessed keeps threads free. Beyond these essentials for cost efficiency, modern cloud applications tend to rely on many managed services. Every managed service has cost-efficient usage patterns, and cost traps that are easy to fall into. Teams need to use these services carefully: batching where possible, avoiding verbose logging, bounding retries and queries, selecting appropriate model tiers, minimizing data movement. The specifics vary by service, but the principle is consistent: code that ignores cost efficiency will generate waste at scale.

Writing cost-efficient code isn't sufficient on its own. Teams need mechanisms to verify efficiency is maintained.

Instrumentation. Provides visibility into cost origins. This means metrics for request volumes and payload sizes, traces enriched with cost-relevant attributes, logs that surface costly operations. Consistent resource tagging—by service, feature, environment, or team—provides the infrastructure-level attribution that complements application instrumentation, enabling cost data from cloud bills to be mapped back to the code and teams responsible. Together, application-level and infrastructure-level instrumentation let you map costs to specific services, features, tenants, and lines of code—linking your cloud bills deep into your application so that you can gain a detailed understanding of where cost is being generated.

Cost regression testing. Catches problems before merge. Cost can regress like latency or reliability. A change might introduce accidental fan-out, expanded logging, larger payloads, more expensive queries, or retry behavior that multiplies spend. For example, a CI check might run integration tests that count external API calls and fail if the count exceeds a baseline—catching a change that accidentally calls an LLM in a loop. Teams treat cost regressions with the same rigor as performance regressions.

Cost-aware code review and guardrails. Changes that affect cost-sensitive paths (logging, external API calls, data processing loops) require explicit review. Automated checks can flag patterns known to cause cost regressions before they reach production.

3. Operations and Continuous Optimization

Once code is in production, cost engineering becomes an ongoing practice with three components.

Regular cost reporting and remediation. Teams review application-centric cost reports that attribute spend to features, services, and code paths. When cost is unaligned with value, such as a feature consuming disproportionate resources relative to its importance, remediation becomes a priority. Anomaly detection surfaces unexpected cost changes—a sudden spike in API calls, an unexpected increase in storage growth, or a gradual drift from baseline. Timely alerts help engineering teams isolate the cause while recent changes to infrastructure-as-code or application code are still fresh, rather than investigating weeks later when the trail has gone cold.

Adapting to scale changes. What was cost-efficient at one scale may not be at the current scale. The messaging platform selected at 1,000 messages/second may not remain economical at 100,000 messages/second. A logging backend that worked for a small team becomes expensive at terabyte-scale ingestion. Regular reassessment ensures architectural choices remain appropriate as the system grows or contracts.

Adapting to the pricing landscape. The market doesn't stand still. New options emerge: features in existing services that reduce costs but require explicit adoption, or new alternatives with more favorable pricing for your workload. AI models are a clear example: newer models often deliver equivalent capability at lower cost, but migration is required to capture the savings. Teams that track these changes and act on them maintain cost efficiency over time.

The end state is a system where cost is as observable and manageable as latency or error rates: something monitored and tuned continuously, not addressed reactively.

Postscript: How Frugal Helps

Application Cost Engineering is a practice, not a product. But building the required infrastructure from scratch—instrumentation, cost attribution pipelines, regression testing, monitoring—requires significant investment.

Frugal accelerates Application Cost Engineering adoption. The platform maps cloud spend to application code, identifying the services, features, and code paths driving cost. It generates actionable recommendations that connect billing data to the engineering decisions behind it.

For teams beginning with Application Cost Engineering, Frugal provides the visibility layer: which code paths are expensive, which managed services are disproportionately costly, where optimization opportunities exist. For teams already practicing ACE, Frugal provides continuous validation as systems evolve.

The goal is giving engineers the data needed to make cost-informed decisions throughout the SDLC, from design reviews through production operations.

Ready to try Frugal? Book a Demo